Let’s talk about BrickSeek Scraper.

You might have stumbled across this gem if you’ve ever hunted for a deal online. BrickSeek is like your personal deal detective, scouring the web to find the best product prices across various retailers. Originally designed to help shoppers find the best prices on items at local stores, it’s grown into a powerful tool for anyone looking to save a buck or two—or more.

BrickSeek’s magic lies in its ability to track prices and inventory in real time. Whether you’re a bargain hunter, a reseller looking to optimize inventory, or someone who loves a good deal, BrickSeek has something to offer. Imagine knowing exactly where that elusive discounted TV is in stock or finding out that the toy your kid has been begging for is half off at a store just a few miles away. That’s the power of price tracking and inventory management.

Introduction to Web Scraping

Now, let’s pivot to web scraping. It sounds techy and kind of is, but don’t let that scare you off. At its core, web scraping is about automating the process of collecting data from websites. Instead of manually checking a dozen sites for price drops or stock updates, a web scraper does the heavy lifting for you, pulling the data into a neat, usable format.

Think of it as having a super diligent assistant who never sleeps, constantly checking for updates and returning the information you need. Web scraping aims to make data collection faster, more efficient, and less tedious.

But with great power comes great responsibility. There are ethical considerations to keep in mind. Not every website wants its data scraped, and some have terms of service that explicitly forbid it. There’s also the legal aspect—scraping the wrong way can get you into hot water. So, it’s essential to know the rules and play fair. Ethical scraping means respecting website policies, not overloading servers with too many requests, and, most importantly, not using the data for nefarious purposes.

So, whether you’re a data nerd, a savvy shopper, or just someone who likes to stay ahead of the curve, understanding the ins and outs of BrickSeek and web scraping can open up a world of possibilities. And, as we’ll see, it’s not just about saving money—it’s about being smarter with your time and resources.

Section 1: Understanding BrickSeek

What is BrickSeek?

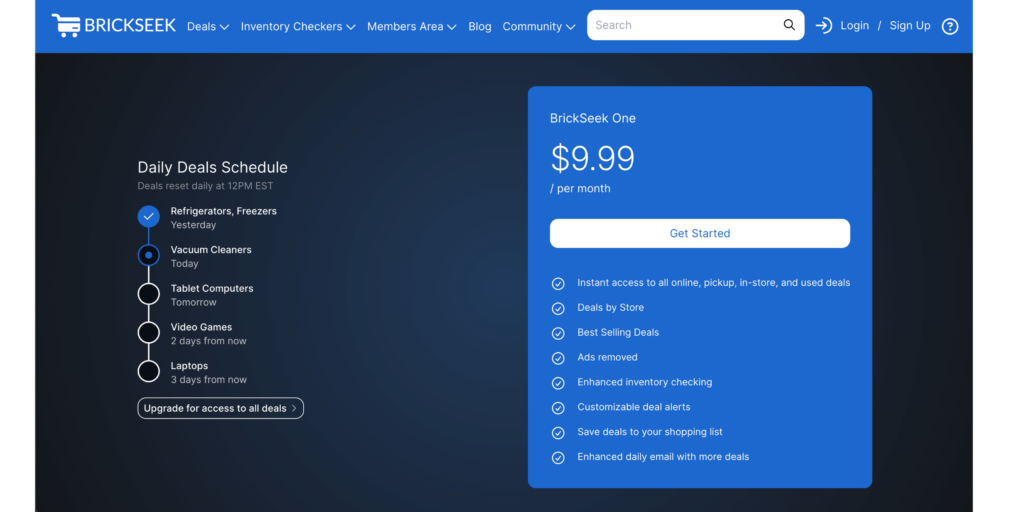

BrickSeek is a price-tracking and inventory-checking tool that has made a name among bargain hunters and savvy shoppers. Launched with the idea of helping people find the best deals in local stores, it has evolved into a robust platform offering a variety of features that cater to a wide audience.

History and Development:

BrickSeek started as a simple inventory tracker for Walmart and has since expanded to include major retailers like Target, Lowe’s, Office Depot, and more. Its development has been driven by the growing need for consumers to make informed purchasing decisions without the hassle of visiting multiple stores or websites. Today, BrickSeek boasts a user-friendly interface and a powerful backend that collects and updates data in real time.

Key Features and Benefits:

- Price Alerts: Users can set alerts for specific products, ensuring they never miss a price drop or a back-in-stock notification.

- Inventory Tracking: Real-time updates on product availability across multiple retailers.

- Deal Finder: A curated list of trending deals and clearance items.

- Local Markdown Feed: Information on local store markdowns and clearance items.

- Online Deals: A section dedicated to online-exclusive deals and discounts.

These features help users save money and time by consolidating information that would otherwise require hours of manual searching.

Use Cases for BrickSeek

Finding Deals and Discounts: One of the primary uses of BrickSeek is to find the best deals and discounts available. Whether it’s a hot new tech gadget, household essentials, or toys for the kids, BrickSeek can help you locate the best online and in-store prices. With its price alerts and deal finder features, you can stay ahead of sales and promotions without constantly checking multiple websites.

Inventory Tracking for Resellers: Keeping track of inventory across different stores can be a logistical nightmare for resellers. BrickSeek simplifies this process by providing real-time inventory data. This is particularly useful for those who buy products in bulk to resell at a profit. Knowing where and when products are available allows resellers to plan their purchases more effectively and avoid stockouts.

Price Comparison for Savvy Shoppers: For those who like to ensure they’re getting the best bang for their buck, BrickSeek’s price comparison feature is invaluable. By comparing prices across multiple retailers, shoppers can ensure they’re not overpaying. This is especially useful during major shopping events like Black Friday, Cyber Monday, or back-to-school sales when prices fluctuate rapidly.

BrickSeek is a versatile tool that caters to different needs—from casual deal hunters to professional resellers. Its ability to provide accurate, real-time data makes it an essential resource for anyone looking to save money and time.

Section 2: The Basics of Web Scraping

What is Web Scraping?

Web scraping is the process of automatically extracting data from websites. This technique is commonly used to collect large amounts of data that would be time-consuming or difficult to gather manually. Using software or scripts, web scraping can automate information retrieval, making it possible to collect and analyze data at scale.

Definition and Common Applications

Web scraping involves using automated bots to visit websites, parse the HTML, and extract useful information. This can include product prices, stock availability, customer reviews, and more. The extracted data can then be stored and analyzed for various purposes.
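To make the "parse the HTML and extract useful information" step concrete, here is a minimal sketch that uses only Python's standard library. The markup, tag names, and class names are made up for illustration; real pages will be structured differently.

```python
from html.parser import HTMLParser

# Hypothetical markup; real pages will use different tags and class names.
SAMPLE_HTML = """
<div class="item"><h2>Desk Lamp</h2><span class="price">$12.99</span></div>
<div class="item"><h2>USB Cable</h2><span class="price">$4.50</span></div>
"""


class DealParser(HTMLParser):
    """Collects title/price pairs from <h2> and <span class="price"> tags."""

    def __init__(self):
        super().__init__()
        self.deals = []       # finished {'title', 'price'} records
        self._field = None    # field that the current text belongs to
        self._current = {}

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h2":
            self._field = "title"
        elif tag == "span" and "price" in classes:
            self._field = "price"

    def handle_data(self, data):
        if self._field and data.strip():
            self._current[self._field] = data.strip()

    def handle_endtag(self, tag):
        if tag in ("h2", "span"):
            self._field = None
        # A record is complete once both fields have been seen.
        if len(self._current) == 2:
            self.deals.append(self._current)
            self._current = {}


parser = DealParser()
parser.feed(SAMPLE_HTML)
for deal in parser.deals:
    print(deal["title"], deal["price"])
```

Libraries such as BeautifulSoup wrap this kind of event handling in a friendlier search API, which is why later sections use them instead.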

Common applications of web scraping include:

- Price Monitoring: Tracking prices across multiple websites to find the best deals.

- Market Research: Collecting data on competitors’ products, prices, and customer feedback.

- Content Aggregation: Gathering articles, blogs, or news from various sources for a comprehensive overview.

- Data Mining: Extracting large datasets for analysis in finance, healthcare, and e-commerce fields.

Benefits and Challenges

- Benefits: Web scraping can save time and resources by automating data collection. It provides up-to-date and comprehensive datasets, enabling better decision-making and strategic planning. For businesses, this means staying competitive by keeping abreast of market trends and consumer behavior.

- Challenges: Despite its advantages, web scraping has technical and ethical challenges. Websites often change their structure, which can break scraping scripts. Additionally, handling large amounts of data requires robust infrastructure and management. Moreover, ensuring that scraping activities do not violate terms of service or ethical standards is crucial.
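One way to notice when a site's structure changes is to compare a fingerprint of the page layout rather than the full HTML, so that ordinary content updates (a new price, a new title) do not trigger a false alarm. The sketch below is one possible approach, not an established method; the function name `structure_fingerprint` and the sample markup are assumptions for illustration.

```python
import hashlib
from html.parser import HTMLParser


class TagSkeleton(HTMLParser):
    """Records tag names and class attributes, ignoring text content."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_starttag(self, tag, attrs):
        classes = dict(attrs).get("class") or ""
        self.parts.append(f"{tag}.{classes}")


def structure_fingerprint(html):
    """Return a hash that changes when the tag/class layout changes."""
    skeleton = TagSkeleton()
    skeleton.feed(html)
    joined = "|".join(skeleton.parts)
    return hashlib.sha256(joined.encode("utf-8")).hexdigest()


# Hypothetical snapshots of the same page at different times.
old_page = '<div class="item"><span class="price">$10</span></div>'
new_price = '<div class="item"><span class="price">$8</span></div>'
new_layout = '<div class="card"><span class="cost">$8</span></div>'

same_layout = structure_fingerprint(old_page) == structure_fingerprint(new_price)
changed_layout = structure_fingerprint(old_page) != structure_fingerprint(new_layout)
print(same_layout, changed_layout)
```

Storing the fingerprint from a known-good run and comparing it on each later run gives an early warning that selectors may need updating.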

Legal and Ethical Considerations

When it comes to web scraping, understanding the legal and ethical landscape is essential. Scraping is not automatically illegal, but how it is done, what is collected, and how the data is used can all raise legal issues.

Understanding Terms of Service

Most websites have terms of service (ToS) that outline acceptable use policies. Scraping a website in violation of its ToS can lead to legal action, especially if the scraping activity is detected and harms the website’s operations. Therefore, it is important to review and comply with the ToS of any site you intend to scrape.

Ethical Scraping Practices

Ethical scraping involves several best practices to ensure that the process is respectful and legal:

- Respecting Robots.txt: This file tells automated clients which parts of the site they may and may not crawl. Adhering to these guidelines is a basic ethical practice.

- Avoiding Excessive Load: Scraping can strain a website’s server. Limiting the frequency and volume of requests helps avoid overloading the site.

- Attribution and Use: If data is scraped for publication or analysis, giving proper credit to the source is essential. Additionally, it is crucial not to use the data in ways that harm the original site or its users.

- Personal Data Protection: Scraping should avoid collecting personal data unless explicit permission is granted and data privacy regulations are followed.
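The robots.txt check in the list above can be automated with Python's standard `urllib.robotparser` module. The sketch below parses a made-up robots.txt so that it runs offline; the rules, the bot name `my-deal-bot`, and the example.com URLs are placeholders, not BrickSeek's actual policy.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content, used here so the example runs offline.
ROBOTS_TXT = """
User-agent: *
Disallow: /account/
Crawl-delay: 5
"""

rp = RobotFileParser()
rp.parse(ROBOTS_TXT.splitlines())

USER_AGENT = "my-deal-bot"  # hypothetical bot name

deals_allowed = rp.can_fetch(USER_AGENT, "https://example.com/deals/")
account_allowed = rp.can_fetch(USER_AGENT, "https://example.com/account/settings")
delay = rp.crawl_delay(USER_AGENT)

print(deals_allowed, account_allowed, delay)
```

Against a live site you would call `rp.set_url(...)` with the site's robots.txt address followed by `rp.read()` instead of `parse()`, and then honor both the allow/disallow answer and any crawl delay before each request.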

In summary, while web scraping offers powerful data collection and analysis capabilities, it must be conducted responsibly. Understanding the legal implications and adhering to ethical standards are key to leveraging this technology effectively and sustainably.

Section 3: Getting Started with BrickSeek Scraping

Tools and Technologies

Embarking on a BrickSeek scraping journey requires the right tools and technologies. Here’s a rundown of the essential programming languages and libraries that will set you up for success.

Recommended Programming Languages

- Python: Widely regarded as the go-to language for web scraping due to its simplicity and the vast array of libraries available. Python’s readability and community support make it ideal for beginners and experienced developers.

Essential Libraries

- BeautifulSoup: A Python library for parsing HTML and XML documents. It creates a parse tree for parsed pages that can be used to extract data easily.

- Scrapy: An open-source and collaborative web crawling framework for Python. Scrapy is powerful and flexible, allowing you to scrape and extract data efficiently.

- Selenium: A web testing library that automates browsers. It’s especially useful for scraping dynamic content rendered by JavaScript, which traditional scraping libraries might miss.

Setting Up Your Environment

Before starting the scraping, you must set up your environment. Here’s a step-by-step guide to help you get started.

Installing Necessary Software and Libraries

- Install Python: Ensure Python is installed on your system. You can download it from python.org.

- Set Up a Virtual Environment: It’s good practice to create a virtual environment for your project to manage dependencies. You can do this using venv.

```bash
python -m venv brickseek_scraper
source brickseek_scraper/bin/activate  # On Windows, use `brickseek_scraper\Scripts\activate`
```

- Install Libraries: With your virtual environment activated, install the necessary libraries using pip.

```bash
pip install beautifulsoup4 scrapy selenium requests
```

Basic Setup for a BrickSeek Scraper Project

- Create a Project Directory:

```bash
# Named differently from the virtual environment directory created above,
# which would otherwise make mkdir fail when run from the same location
mkdir brickseek_project
cd brickseek_project
```

- Initialize a Scrapy Project: If you’re using Scrapy, initialize a new project.

```bash
scrapy startproject brickseek
cd brickseek
```

- Setting Up Selenium: For Selenium, you’ll need to download the appropriate WebDriver for your browser (e.g., ChromeDriver for Google Chrome).

```bash
# Example for installing ChromeDriver manually.
# The version in the URL must match your installed Chrome version.
# Selenium 4.6 and later can also locate a matching driver automatically.
wget https://chromedriver.storage.googleapis.com/91.0.4472.101/chromedriver_linux64.zip
unzip chromedriver_linux64.zip
sudo mv chromedriver /usr/local/bin/
```

- Basic Scrapy Spider: Create a simple Scrapy spider to start scraping BrickSeek.

```python
# brickseek/brickseek/spiders/brickseek_spider.py
import scrapy


class BrickseekSpider(scrapy.Spider):
    name = "brickseek"
    start_urls = ["https://brickseek.com/"]

    def parse(self, response):
        # Log each visited URL to confirm the spider runs
        self.log('Visited %s' % response.url)
```

- Running Your Spider: Execute the spider to see if everything is set up correctly.

```bash
scrapy crawl brickseek
```

By setting up your environment with these tools and technologies, you’re ready to start scraping data from BrickSeek. This preparation ensures you have a solid foundation for building more complex scraping tasks and handling the data efficiently.

Section 4: Building Your BrickSeek Scraper

Step-by-Step Guide to BrickSeek Scraper

Building a BrickSeek scraper involves several steps, from identifying the target data to writing the code that extracts this information. Let’s break it down.

Identifying the Target Data (URLs, HTML Elements):

- Start by Exploring BrickSeek: Visit BrickSeek and navigate to the pages you want to scrape. Look at the URL patterns, product pages, and the structure of the HTML.

- Use Developer Tools: Open your browser’s developer tools (usually with F12 or right-clicking and selecting “Inspect”). Inspect the HTML elements that contain the data you’re interested in (like prices, inventory status, and product details).

Writing a Basic Scraper with BeautifulSoup/Scrapy

- Using BeautifulSoup:

```python
import requests
from bs4 import BeautifulSoup

# Define the URL of the page to scrape
url = 'https://brickseek.com/deal/'

# Send a GET request to the URL; fail fast on timeouts and HTTP errors
response = requests.get(url, timeout=10)
response.raise_for_status()

# Parse the HTML content using BeautifulSoup
soup = BeautifulSoup(response.text, 'html.parser')

# Extract data (e.g., prices, inventory, product details).
# The tag and class names are illustrative; confirm them in developer tools.
deals = soup.find_all('div', class_='item')

for deal in deals:
    title = deal.find('h2').text.strip()
    price = deal.find('span', class_='price').text.strip()
    print(f'Title: {title}, Price: {price}')
```

- Using Scrapy:

```python
import scrapy


class BrickseekSpider(scrapy.Spider):
    name = "brickseek"
    start_urls = ['https://brickseek.com/deal/']

    def parse(self, response):
        # The selectors are illustrative; confirm them in developer tools
        deals = response.css('div.item')
        for deal in deals:
            # get(default='') avoids an AttributeError when an element is missing
            title = deal.css('h2::text').get(default='').strip()
            price = deal.css('span.price::text').get(default='').strip()
            yield {
                'title': title,
                'price': price
            }
```

Handling Dynamic Content

Sometimes, the content you need is loaded dynamically using JavaScript. Here’s how to handle it.

Using Selenium for JavaScript-Rendered Content:

- Setup Selenium:

```python
from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.chrome.service import Service
from selenium.webdriver.chrome.options import Options

# Run Chrome without opening a visible window
chrome_options = Options()
chrome_options.add_argument("--headless")

# Replace with the actual path to your ChromeDriver binary
service = Service('path/to/chromedriver')
driver = webdriver.Chrome(service=service, options=chrome_options)

try:
    driver.get('https://brickseek.com/deal/')
    deals = driver.find_elements(By.CLASS_NAME, 'item')
    for deal in deals:
        title = deal.find_element(By.TAG_NAME, 'h2').text.strip()
        price = deal.find_element(By.CLASS_NAME, 'price').text.strip()
        print(f'Title: {title}, Price: {price}')
finally:
    # Always close the browser, even if extraction fails
    driver.quit()
```

Techniques for Dealing with AJAX and Other Dynamic Elements

Wait for Elements to Load: Use explicit waits to ensure that elements have loaded before attempting to extract data.

```python
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

# Assumes `driver` was created as in the Selenium setup above
driver.get('https://brickseek.com/deal/')

# Wait up to 10 seconds for at least one matching element to appear
WebDriverWait(driver, 10).until(
    EC.presence_of_element_located((By.CLASS_NAME, 'item'))
)

deals = driver.find_elements(By.CLASS_NAME, 'item')
for deal in deals:
    title = deal.find_element(By.TAG_NAME, 'h2').text.strip()
    price = deal.find_element(By.CLASS_NAME, 'price').text.strip()
    print(f'Title: {title}, Price: {price}')
```

Handling Infinite Scrolling: For pages with infinite scrolling, you may need to scroll down to load more content.

```python
import time

from selenium.webdriver.common.by import By

# Assumes `driver` was created as in the Selenium setup above
driver.get('https://brickseek.com/deal/')

last_height = driver.execute_script("return document.body.scrollHeight")

while True:
    # Scroll to the bottom and give the page time to load more items
    driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
    time.sleep(2)
    new_height = driver.execute_script("return document.body.scrollHeight")
    # Stop once scrolling no longer increases the page height
    if new_height == last_height:
        break
    last_height = new_height

deals = driver.find_elements(By.CLASS_NAME, 'item')
for deal in deals:
    title = deal.find_element(By.TAG_NAME, 'h2').text.strip()
    price = deal.find_element(By.CLASS_NAME, 'price').text.strip()
    print(f'Title: {title}, Price: {price}')
```

By following these steps, you can build a robust BrickSeek scraper that effectively handles static and dynamic content and extracts valuable data seamlessly.
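The infinite-scrolling loop shown earlier keeps going until the page height stops changing, which can run for a very long time on a feed that never ends. A bounded variant might look like the sketch below. The function name `scroll_until_stable` and the `FakeDriver` stand-in are assumptions added so the example runs without a browser; with Selenium you would pass your real `driver` instead.

```python
import time


def scroll_until_stable(driver, max_rounds=20, pause=2.0, sleep=time.sleep):
    """Scroll to the bottom until the page height stops growing.

    Returns the number of scrolls performed. `max_rounds` caps the loop so a
    page that keeps loading content cannot run forever.
    """
    last_height = driver.execute_script("return document.body.scrollHeight")
    for rounds in range(1, max_rounds + 1):
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        sleep(pause)  # give the page time to load more items
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            return rounds
        last_height = new_height
    return max_rounds


class FakeDriver:
    """Stand-in for a Selenium driver so the sketch runs without a browser."""

    def __init__(self, heights):
        self._heights = list(heights)
        self._index = 0

    def execute_script(self, script):
        if script.startswith("return"):
            return self._heights[self._index]
        # A scroll "loads" the next height until the list is exhausted.
        self._index = min(self._index + 1, len(self._heights) - 1)


fake = FakeDriver([1000, 2000, 3000])
scrolls = scroll_until_stable(fake, pause=0, sleep=lambda seconds: None)
print(scrolls)
```

Passing `sleep` as a parameter is a small design choice that keeps the function testable; in normal use the default `time.sleep` applies.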

Section 5: Enhancing Your Scraper

To improve your BrickSeek scraper, you can incorporate techniques that keep it efficient and long-lived. This section covers proxies, which mask your IP address and help avoid bans, and methods for mimicking human behavior to stay under the radar.

Implementing Proxies

Why Use Proxies?

Proxies are essential for web scraping, especially when targeting sites with strict anti-scraping measures. Here’s why you should consider using proxies:

- Anonymity: Proxies hide your IP address, making your requests appear from different locations.

- Avoiding Bans: Using multiple proxies can distribute your requests, reducing the chance of being blocked for excessive traffic.

- Accessing Geo-Restricted Content: Proxies can make your requests appear as if they are coming from a specific country, allowing you to access region-locked data.
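As a rough illustration of distributing requests across several proxies, the sketch below cycles through a list in round-robin order and returns a mapping in the format the `requests` library accepts for its `proxies` argument. The proxy addresses and the helper name `proxy_rotator` are placeholders, not real endpoints or a provider API.

```python
from itertools import cycle

# Hypothetical proxy endpoints; substitute the details from your provider.
PROXY_URLS = [
    "http://username:password@proxy1.example.com:8000",
    "http://username:password@proxy2.example.com:8000",
    "http://username:password@proxy3.example.com:8000",
]


def proxy_rotator(proxy_urls):
    """Return a function that yields the next proxies mapping on each call."""
    pool = cycle(proxy_urls)

    def next_proxies():
        proxy = next(pool)
        # The same endpoint handles both plain and TLS traffic here.
        return {"http": proxy, "https": proxy}

    return next_proxies


next_proxies = proxy_rotator(PROXY_URLS)
for _ in range(4):
    print(next_proxies()["http"])  # the fourth call wraps around to proxy1
```

Each request would then be sent with `proxies=next_proxies()`. Round-robin is the simplest policy; random choice or skipping proxies that recently failed are common alternatives.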

Setting Up IPBurger Proxies for Your Scraper

Using IPBurger proxies is straightforward. Here’s how to integrate them into your BrickSeek scraper:

- Sign Up for IPBurger:

- Visit IPBurger’s website and sign up for a proxy plan that fits your needs.

- Obtain your proxy details, including IP address, port, username, and password.

- Configure Proxies in Your Code:

With Requests and BeautifulSoup:

```python
import requests
from bs4 import BeautifulSoup

# Proxy details (replace the placeholders with the values from your provider)
proxies = {
    'http': 'http://username:password@proxy_ip:proxy_port',
    # HTTPS traffic is usually tunneled through the same http:// proxy endpoint
    'https': 'http://username:password@proxy_ip:proxy_port'
}

url = 'https://brickseek.com/deal/'
response = requests.get(url, proxies=proxies, timeout=10)

soup = BeautifulSoup(response.text, 'html.parser')
deals = soup.find_all('div', class_='item')

for deal in deals:
    title = deal.find('h2').text.strip()
    price = deal.find('span', class_='price').text.strip()
    print(f'Title: {title}, Price: {price}')
```

- With Scrapy:

```python
import scrapy


class BrickseekSpider(scrapy.Spider):
    name = "brickseek"
    start_urls = ['https://brickseek.com/deal/']

    def start_requests(self):
        for url in self.start_urls:
            # The 'proxy' meta key routes this request through the proxy
            yield scrapy.Request(url=url, callback=self.parse, meta={
                'proxy': 'http://username:password@proxy_ip:proxy_port'
            })

    def parse(self, response):
        deals = response.css('div.item')
        for deal in deals:
            title = deal.css('h2::text').get(default='').strip()
            price = deal.css('span.price::text').get(default='').strip()
            yield {
                'title': title,
                'price': price
            }
```

Avoiding Bans and Rate Limits

You must implement techniques that mimic human browsing behavior to prevent your scraper from being detected and banned.

Techniques for Mimicking Human Behavior

- Randomize User Agents:

- Rotate user-agent strings to make requests appear to come from different browsers.

```python
import random

import requests

user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/91.0.4472.124 Safari/537.36',
    'Mozilla/5.0 (X11; Ubuntu; Linux x86_64; rv:89.0) Gecko/20100101 Firefox/89.0',
    # Add more user agents as needed
]

# Pick a different user agent for each request
headers = {
    'User-Agent': random.choice(user_agents)
}

# `url` and `proxies` are defined as in the proxy example above
response = requests.get(url, headers=headers, proxies=proxies, timeout=10)
```

- Implementing Delays and Random Intervals:

- Introduce delays between requests to mimic natural browsing patterns.

- Use random intervals to avoid creating a predictable pattern.

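```python
import random
import time

import requests
from bs4 import BeautifulSoup

# Pages to fetch; extend this list with the URLs you need
urls = ['https://brickseek.com/deal/']

for url in urls:
    response = requests.get(url, timeout=10)
    soup = BeautifulSoup(response.text, 'html.parser')
    deals = soup.find_all('div', class_='item')
    for deal in deals:
        title = deal.find('h2').text.strip()
        price = deal.find('span', class_='price').text.strip()
        print(f'Title: {title}, Price: {price}')
    # Random delay between requests (after each page, not each parsed item)
    time.sleep(random.uniform(1, 3))
```

By integrating proxies and simulating human behavior, you can significantly enhance the effectiveness and longevity of your BrickSeek scraper, ensuring it runs smoothly and undetected for extended periods.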
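If requests are issued from several places in your code, the delay logic from this section can be collected into one small helper. The sketch below is one possible design, assuming a hypothetical `Throttle` class that enforces a randomized minimum gap between consecutive calls; the `clock` and `sleep` parameters exist only so it can be tested without real waiting.

```python
import random
import time


class Throttle:
    """Enforces a randomized minimum gap between consecutive requests."""

    def __init__(self, min_delay=1.0, max_delay=3.0,
                 clock=time.monotonic, sleep=time.sleep):
        self.min_delay = min_delay
        self.max_delay = max_delay
        self._clock = clock
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Sleep just long enough to honor the gap; return seconds slept."""
        gap = random.uniform(self.min_delay, self.max_delay)
        slept = 0.0
        if self._last_request is not None:
            elapsed = self._clock() - self._last_request
            slept = max(0.0, gap - elapsed)
            if slept:
                self._sleep(slept)
        self._last_request = self._clock()
        return slept


# Short delays here keep the demonstration quick.
throttle = Throttle(min_delay=0.01, max_delay=0.02)
for page in range(3):
    waited = throttle.wait()
    print(f"page {page}: waited {waited:.3f}s")  # fetch the page here
```

Because the helper subtracts the time already spent parsing, slow pages do not add an unnecessary extra pause on top of the configured gap.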

Section 6: Data Management and Storage

Once you’ve successfully scraped data from BrickSeek, the next crucial step is managing and storing this data efficiently. This section will guide you through different methods of storing your scraped data and how to clean and format it for further analysis.

Storing Scraped Data

Options for Storing Data

Depending on the volume and complexity of the data, you have several options for storing your scraped data:

- CSV Files:

- Simple and easy to use for smaller datasets.

- Compatible with most data analysis tools and software.

- Ideal for quick analysis and visualization.

- Databases:

- More suitable for larger datasets and more complex queries.

- Options include SQLite for local storage and MySQL or PostgreSQL for more robust, scalable solutions.

- Provides better data integrity and easier access for analysis.

- Cloud Storage:

- For large-scale scraping projects that require distributed storage solutions.

- Options include AWS S3, Google Cloud Storage, or Azure Blob Storage.

- Offers high availability and scalability.

Writing Data to Files

Here’s how you can write your scraped data to a CSV file or a database:

Writing to a CSV File:

```python
import csv

data = [
    {'title': 'Deal 1', 'price': '$10'},
    {'title': 'Deal 2', 'price': '$15'},
    # Add more data as needed
]

# newline='' prevents blank rows on Windows
with open('deals.csv', mode='w', newline='', encoding='utf-8') as file:
    writer = csv.DictWriter(file, fieldnames=['title', 'price'])
    writer.writeheader()
    for item in data:
        writer.writerow(item)
```

Writing to a SQLite Database:

```python
import sqlite3

# Connect to the SQLite database (or create it if it doesn't exist)
conn = sqlite3.connect('deals.db')
cursor = conn.cursor()

# Create a table
cursor.execute('''
    CREATE TABLE IF NOT EXISTS deals (
        id INTEGER PRIMARY KEY,
        title TEXT,
        price TEXT
    )
''')

# Insert data into the table
data = [
    ('Deal 1', '$10'),
    ('Deal 2', '$15'),
    # Add more data as needed
]

# Placeholders (?) keep the values separate from the SQL text
cursor.executemany('INSERT INTO deals (title, price) VALUES (?, ?)', data)
conn.commit()

# Close the connection
conn.close()
```

Data Cleaning and Formatting

Raw data scraped from websites often needs cleaning and formatting before being analyzed. Here are some basic techniques for preparing your data.

Basic Data Cleaning Techniques

Removing Duplicates:

- Ensure each entry in your dataset is unique.

```python
import pandas as pd

df = pd.read_csv('deals.csv')
df.drop_duplicates(inplace=True)
df.to_csv('deals_cleaned.csv', index=False)
```

Handling Missing Values:

- Fill in missing values or remove entries with missing data.

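```python
import pandas as pd

df = pd.read_csv('deals.csv')

# Choose one strategy: after dropna() there is nothing left for fillna() to fill
df = df.dropna()          # Option 1: remove rows with missing values
# df = df.fillna('N/A')   # Option 2: fill missing values with 'N/A' instead

df.to_csv('deals_cleaned.csv', index=False)
```

Normalizing Data Formats: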

- Ensure consistency in data formats (e.g., price formats, date formats).

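```python
# regex=False treats '$' as a literal character rather than a regex anchor;
# thousands separators are removed so values like '1,299.99' convert cleanly
df['price'] = (
    df['price']
    .str.replace('$', '', regex=False)
    .str.replace(',', '', regex=False)
    .astype(float)
)
df.to_csv('deals_cleaned.csv', index=False)
```

Structuring Data for Analysis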

Organizing Data into Tables:

- Structure your data into tables with clear headers and consistent data types.

- For relational databases, ensure proper normalization and relationships between tables.
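To show what normalization might look like in practice, the sketch below splits retailer names into their own table and links deals to them by key, using an in-memory SQLite database. The table names, column names, and rows are illustrative assumptions, not a schema prescribed by BrickSeek or by this guide.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # in-memory database for the example
conn.execute("PRAGMA foreign_keys = ON")
conn.executescript("""
CREATE TABLE retailers (
    id   INTEGER PRIMARY KEY,
    name TEXT NOT NULL UNIQUE
);
CREATE TABLE deals (
    id          INTEGER PRIMARY KEY,
    retailer_id INTEGER NOT NULL REFERENCES retailers(id),
    title       TEXT NOT NULL,
    price       REAL NOT NULL
);
""")

# Illustrative rows, not real scraped data.
conn.executemany("INSERT INTO retailers (name) VALUES (?)",
                 [("Walmart",), ("Target",)])
conn.executemany(
    "INSERT INTO deals (retailer_id, title, price) VALUES (?, ?, ?)",
    [(1, "Desk Lamp", 12.99), (2, "Desk Lamp", 14.49), (1, "USB Cable", 4.50)],
)

# Join the two tables to summarize deals per retailer.
rows = conn.execute("""
    SELECT r.name, COUNT(*) AS deal_count, MIN(d.price) AS lowest_price
    FROM deals AS d
    JOIN retailers AS r ON r.id = d.retailer_id
    GROUP BY r.name
    ORDER BY r.name
""").fetchall()
print(rows)
conn.close()
```

Storing each retailer name once avoids spelling drift between rows, and storing prices as numbers rather than text lets the database sort and aggregate them directly.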

Using Data Frames:

- DataFrames, like those provided by Pandas in Python, offer powerful data manipulation and analysis tools.

```python
import pandas as pd

df = pd.read_csv('deals_cleaned.csv')
# Perform analysis and manipulations
```

By effectively managing and storing your scraped data, you can ensure that it is ready for analysis and further use. Proper data handling practices will save you time and effort, making your data analysis process smoother and more efficient.
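Price normalization, covered earlier in this section, can also be done before the data ever reaches pandas. The sketch below uses only the standard library; the helper name `parse_price` is an assumption, and it presumes US-style formatting with `$`, commas as thousands separators, and a period as the decimal point.

```python
import re
from decimal import Decimal, InvalidOperation


def parse_price(text):
    """Convert a price string such as '$1,299.99' to Decimal, or None."""
    if text is None:
        return None
    # Keep digits and the decimal point; drop currency symbols, commas, spaces.
    cleaned = re.sub(r"[^0-9.]", "", text)
    try:
        return Decimal(cleaned)
    except InvalidOperation:
        # Nothing numeric was left, for example 'N/A' or an empty string.
        return None


for raw in ["$10", "$1,299.99", " $4.50 ", "N/A", None]:
    print(repr(raw), "->", parse_price(raw))
```

`Decimal` is used rather than `float` so that cent values are stored exactly, which matters when prices are later summed or compared.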

Section 7: Analyzing and Using Your Data

Once you have successfully scraped and stored your data from BrickSeek, the next step is to analyze it to extract valuable insights. This section will guide you through basic and advanced data analysis techniques to help you make the most out of your collected data.

Basic Data Analysis

Tools and Techniques for Analyzing Scraped Data

Excel/Google Sheets:

- Usage: Ideal for quick and straightforward data analysis.

- Techniques:

- Sorting and filtering data.

- Using pivot tables to summarize and explore data.

- Applying formulas and functions for calculations and transformations.

Pandas (Python Library):

- Usage: Powerful tool for data manipulation and analysis.

- Techniques:

- Reading data into DataFrames for structured analysis.

- Performing descriptive statistics (mean, median, standard deviation).

- Grouping and aggregating data to find patterns.

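```python
import pandas as pd

# Load data into a DataFrame
df = pd.read_csv('deals_cleaned.csv')

# Basic statistics
print(df.describe())

# Group by a specific column and aggregate.
# Assumes the data includes a 'category' column; the numeric column is
# selected explicitly because mean() fails on text columns such as 'title'.
grouped = df.groupby('category')['price'].mean()
print(grouped)
```

Visualization Tools (Matplotlib, Seaborn, Tableau):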

- Usage: Visualize data to identify trends and patterns.

- Techniques:

- Creating line graphs, bar charts, histograms, and scatter plots.

- Using heatmaps for correlation analysis.

```python
import matplotlib.pyplot as plt
import seaborn as sns

# Assumes `df` was loaded as in the Pandas example above

# Plot a histogram of prices
sns.histplot(df['price'], bins=20, kde=True)
plt.show()

# Create a bar chart of how many deals fall in each category
df['category'].value_counts().plot(kind='bar')
plt.show()
```

Finding Patterns and Insights

- Trend Analysis:

- Look for trends over time, such as price fluctuations, seasonal discounts, or changes in inventory levels.

- Example: Identifying periods with the highest discounts on specific product categories.

- Comparative Analysis:

- Compare prices across different retailers to find the best deals.

- Example: Comparing the average price of a product on different platforms.

- Correlation Analysis:

- Determine relationships between different variables.

- Example: Correlating product prices with inventory levels to understand supply-demand dynamics.
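The comparative analysis described above can be sketched in a few lines of plain Python. The records are invented for illustration, and the field names `retailer`, `title`, and `price` are assumptions about how your stored data is shaped.

```python
from collections import defaultdict
from statistics import mean

# Illustrative records; in practice these come from your stored scrape results.
records = [
    {"retailer": "Walmart", "title": "Desk Lamp", "price": 12.00},
    {"retailer": "Target", "title": "Desk Lamp", "price": 15.00},
    {"retailer": "Walmart", "title": "USB Cable", "price": 4.00},
    {"retailer": "Target", "title": "USB Cable", "price": 5.00},
]

# Comparative analysis: average price per retailer.
by_retailer = defaultdict(list)
for record in records:
    by_retailer[record["retailer"]].append(record["price"])
average_price = {name: mean(prices) for name, prices in by_retailer.items()}

# Best deal: cheapest retailer for each product.
cheapest = {}
for record in records:
    best = cheapest.get(record["title"])
    if best is None or record["price"] < best["price"]:
        cheapest[record["title"]] = record

print(average_price)
for title, record in cheapest.items():
    print(f"{title}: {record['retailer']} at ${record['price']:.2f}")
```

The same grouping can be expressed with a pandas `groupby` once the dataset grows; the plain-Python version simply makes the logic explicit.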

Advanced Analysis

Using Machine Learning for Predictive Insights

Predictive Modeling:

- Apply machine learning algorithms to predict future trends based on historical data.

- Example: Predicting future prices of products based on past data using regression models.

```python
from sklearn.model_selection import train_test_split
from sklearn.linear_model import LinearRegression

# Prepare data for modeling
# 'feature1' and 'feature2' are placeholders; replace with relevant numeric features
X = df[['feature1', 'feature2']]
y = df['price']

# Hold out 20% of the rows to evaluate the model
X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

# Train a regression model
model = LinearRegression()
model.fit(X_train, y_train)

# Make predictions
predictions = model.predict(X_test)
```

Clustering:

- Use clustering algorithms to segment products into categories based on similarities.

- Example: Grouping products by price range, category, and popularity to identify distinct clusters.

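```python
from sklearn.cluster import KMeans

# Prepare data for clustering
# 'feature1' and 'feature2' are placeholders; replace with relevant numeric features
features = df[['feature1', 'feature2']]

# Perform K-means clustering; n_init is set explicitly because its default
# differs between scikit-learn versions
kmeans = KMeans(n_clusters=3, n_init=10, random_state=42)
df['cluster'] = kmeans.fit_predict(features)
```

Integrating with Other Data Sources for Richer Analysis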

- Combining Data Sets:

- Integrate BrickSeek data with other data sources like sales data, customer reviews, or competitor prices for a comprehensive analysis.

- Example: Combining BrickSeek price data with sales performance data to analyze the impact of pricing strategies on sales.

- APIs and Databases:

- Use APIs to fetch additional data or connect to external databases for more extensive analysis.

- Example: Using e-commerce APIs to fetch real-time data on product availability and reviews.

- Data Visualization Dashboards:

- Create interactive dashboards using tools like Tableau, Power BI, or Plotly Dash to visualize integrated data and monitor key metrics.

- Example: Building a real-time dashboard to track price trends, inventory changes, and competitor pricing.
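Combining data sets usually comes down to joining records on a shared key. The sketch below attaches sales figures to price records by SKU, keeping products that have no sales entry. The data and the field names `sku`, `price`, and `units_sold` are made up for illustration.

```python
# Illustrative data keyed by a shared product identifier (SKU).
price_data = [
    {"sku": "A100", "price": 12.99},
    {"sku": "B200", "price": 4.50},
    {"sku": "C300", "price": 89.00},
]
sales_data = [
    {"sku": "A100", "units_sold": 40},
    {"sku": "C300", "units_sold": 3},
]

# Index one dataset by key, then attach its fields to the other (a left join).
sales_by_sku = {row["sku"]: row for row in sales_data}
combined = []
for row in price_data:
    sales = sales_by_sku.get(row["sku"], {})
    combined.append({
        "sku": row["sku"],
        "price": row["price"],
        "units_sold": sales.get("units_sold"),  # None when no sales record
    })

for row in combined:
    print(row)
```

With pandas, the equivalent operation is a merge on the key column; the important decision in either case is which side's unmatched rows to keep.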

These basic and advanced data analysis techniques can uncover valuable insights from your BrickSeek data, allowing you to make informed decisions, optimize your strategies, and stay ahead in the competitive market.

Section 8: Troubleshooting and Optimization

Building a BrickSeek scraper can be rewarding, but like any complex task, it comes with its own challenges. This section will help you troubleshoot common issues and optimize your scraper for the best performance, ensuring that you get the most out of your data extraction efforts.

Common Issues and Solutions

Handling Common Errors and Issues

- HTTP Errors (404, 500, etc.):

- Problem: You might encounter HTTP errors during scraping, such as 404 (Not Found) or 500 (Internal Server Error).

- Solution: Implement error handling in your scraper to manage these errors gracefully. Use try-except blocks to catch exceptions and retry the request after a short delay.

```python
import requests
from time import sleep

url = 'http://example.com'

for attempt in range(5):
    try:
        response = requests.get(url, timeout=10)
        response.raise_for_status()  # Raises an HTTPError for bad responses
        break  # Exit loop if request is successful
    except requests.exceptions.RequestException as e:
        print(f'Error: {e}, retrying...')
        sleep(5)
else:
    # Runs only if none of the attempts succeeded
    print('Giving up after 5 attempts')
```

- CAPTCHA and Anti-Bot Measures:

- Problem: Websites often use CAPTCHAs and other anti-bot mechanisms to prevent scraping.

- Solution: Use IP rotation with proxies, and consider integrating CAPTCHA-solving services if necessary. Implement delays and random intervals between requests to mimic human behavior.

```python
import random
from time import sleep

delay = random.uniform(2, 5)  # Random delay between 2 and 5 seconds
sleep(delay)
```

- JavaScript-Rendered Content:

- Problem: Some content on BrickSeek might be rendered using JavaScript, which static scrapers like BeautifulSoup cannot handle.

- Solution: Use Selenium to handle JavaScript-rendered content.

```python
from selenium import webdriver

driver = webdriver.Chrome()
driver.get('http://example.com')

# page_source holds the HTML after JavaScript has run
content = driver.page_source
driver.quit()
```

- Blocked IP Addresses:

- Problem: Your IP address might get blocked due to frequent requests.

- Solution: Use IPBurger proxies to rotate IP addresses and avoid detection.

```python
import requests

# Placeholder address; `url` is the page you want to fetch
proxy = "http://proxyserver:port"
proxies = {
    "http": proxy,
    "https": proxy,
}

response = requests.get(url, proxies=proxies)
```

Optimizing Your Scraper for Performance

- Efficient Data Extraction:

- Minimize the data you request by targeting specific HTML elements and attributes.

```python
from bs4 import BeautifulSoup

# `response` is the result of an earlier requests.get() call
soup = BeautifulSoup(response.text, 'html.parser')
price = soup.find('span', {'class': 'price'}).text
```

- Concurrent Requests:

- Use asynchronous libraries like aiohttp or multithreading to make concurrent requests and speed up data extraction.

```python
import asyncio

import aiohttp


async def fetch(session, url):
    async with session.get(url) as response:
        return await response.text()


async def main(urls):
    # One shared session; all requests run concurrently
    async with aiohttp.ClientSession() as session:
        tasks = [fetch(session, url) for url in urls]
        return await asyncio.gather(*tasks)


urls = ['http://example.com/page1', 'http://example.com/page2']

# asyncio.run() creates and closes the event loop for you
pages = asyncio.run(main(urls))
```

- Caching and Throttling:

- Implement caching to avoid redundant requests for the same data, and throttle requests to prevent server overload.

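```python
import requests
from cachetools import TTLCache

# Keep up to 100 pages, each for 300 seconds
cache = TTLCache(maxsize=100, ttl=300)


def get_page(url):
    # Serve from the cache when a fresh copy exists
    if url in cache:
        return cache[url]
    response = requests.get(url)
    cache[url] = response.text
    return response.text
```

Advanced Tips and Tricks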

Expert Tips for Making the Most of Your BrickSeek Scraper

- Dynamic User Agents:

- Rotate user agents to simulate different browsers and reduce the likelihood of getting blocked.

```python
import random

import requests

user_agents = [
    'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/58.0.3029.110 Safari/537.36',
    'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_11_6) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/56.0.2924.87 Safari/537.36',
    # Add more user agents
]

# `url` is the page you want to fetch
headers = {'User-Agent': random.choice(user_agents)}
response = requests.get(url, headers=headers)
```

- Session Persistence:

- Use session objects to persist cookies and headers across multiple requests, which can help maintain login sessions and reduce detection.

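```python
import random

import requests

# `user_agents` and `url` are defined as in the previous example
session = requests.Session()
session.headers.update({'User-Agent': random.choice(user_agents)})

# Cookies set by the server are reused on later session requests
response = session.get(url)
```

Continuous Improvement Strategies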

- Regular Maintenance:

- Regularly update your scraper to adapt to changes in BrickSeek’s website structure. Automated monitoring can alert you to necessary updates.

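import requests

def monitor_changes(url, current_structure):
    # current_structure is the page HTML saved from an earlier run
    response = requests.get(url)
    new_structure = response.text
    # Note: raw HTML also differs when prices or stock change, not only when the layout does
    if new_structure != current_structure:
        print("Website structure has changed!")
        # Update scraper logic here

- Feedback Loop: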

- Implement a feedback loop to learn from errors and performance metrics, continuously refining your scraper for better efficiency and accuracy.

import logging
import requests

# Write scraper events to a log file you can review later
logging.basicConfig(filename='scraper.log', level=logging.INFO)

def log_error(error):
    logging.error(f"Error occurred: {error}")

try:
    response = requests.get(url)
    response.raise_for_status()  # Raise an exception for 4xx and 5xx responses
except requests.exceptions.RequestException as e:
    log_error(e)

Following these troubleshooting and optimization techniques helps your BrickSeek scraper run smoothly and efficiently, and keeps the data it collects reliable.
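One caveat about the Regular Maintenance check above: comparing the full HTML text will also flag ordinary content updates, such as a new price. A minimal sketch of one alternative, using only Python’s standard library, hashes just the tag names and class attributes. The names structure_fingerprint and structure_changed are illustrative, not part of any library.

```python
import hashlib
from html.parser import HTMLParser


class _SkeletonParser(HTMLParser):
    """Collects tag names and class attributes, ignoring text content."""

    def __init__(self):
        super().__init__()
        self.parts = []

    def handle_starttag(self, tag, attrs):
        # Keep the tag name plus its sorted class list; skip everything else
        classes = dict(attrs).get('class') or ''
        self.parts.append(f"{tag}.{'.'.join(sorted(classes.split()))}")


def structure_fingerprint(html):
    """Return a SHA-256 hex digest of the page's tag-and-class skeleton."""
    parser = _SkeletonParser()
    parser.feed(html)
    parser.close()
    skeleton = '>'.join(parser.parts)
    return hashlib.sha256(skeleton.encode('utf-8')).hexdigest()


def structure_changed(old_html, new_html):
    """True when the tag-and-class skeleton differs between two pages."""
    return structure_fingerprint(old_html) != structure_fingerprint(new_html)
```

With this approach, a changed price leaves the fingerprint untouched, while a renamed tag or class changes it. A page that lists a varying number of items will still change its fingerprint, so you may prefer to fingerprint only the part of the page your scraper depends on.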

Conclusion

Recap of Key Points

Building a BrickSeek scraper can open up a world of opportunities for price tracking, deal hunting, and inventory management. Throughout this guide, we’ve covered:

- Understanding BrickSeek: From its history and development to its key features and use cases.

- Basics of Web Scraping: Defining web scraping, its benefits and challenges, and the importance of ethical considerations.

- Getting Started: Tools, technologies, and setting up your environment for web scraping.

- Building Your Scraper: Step-by-step guide to writing a basic scraper, handling dynamic content, and extracting relevant data.

- Enhancing Your Scraper: Implementing proxies, avoiding bans, and mimicking human behavior to ensure smooth operation.

- Data Management: Storing, cleaning, and formatting your scraped data for analysis.

- Analyzing and Using Data: Tools and techniques for basic and advanced data analysis, and for integrating your scraped data with other data sources.

- Troubleshooting and Optimization: Handling common issues, optimizing performance, and advanced tips for improving your scraper.

Future Trends

As technology evolves, so does the landscape of web scraping and data analysis. Here are some trends to watch out for:

- Emerging Technologies in Web Scraping: The use of AI and machine learning to create more sophisticated and efficient scrapers, capable of adapting to changing website structures and extracting richer data.

- The Future of Price Tracking and Inventory Management: More advanced tools and platforms will emerge, providing real-time data and insights for dynamic pricing strategies, better inventory management, and enhanced customer experiences.

Enhancing Your Web Scraping with IPBurger

Implementing proxies is crucial in building an efficient and reliable web scraper. Proxies help you avoid IP bans, distribute requests, and maintain anonymity while scraping data. IPBurger offers a range of high-quality proxy services, including residential, mobile, and datacenter proxies, tailored to meet the needs of web scrapers.

- Security and Anonymity: IPBurger’s proxies ensure that your scraping activities remain anonymous and secure, protecting your data from being tracked or intercepted.

- Avoiding Bans: By rotating IP addresses and mimicking human behavior, IPBurger’s proxies help you avoid detection and bans, ensuring uninterrupted scraping.

- Global Reach: Access data from different geographic locations using IPBurger’s global proxy network, enhancing your ability to perform localized scraping and analysis.

Now that you have a thorough understanding of how to build a BrickSeek scraper, it’s time to put that knowledge into practice. Start your scraping project today and explore the benefits of automating your price tracking and inventory management. Don’t forget to leverage IPBurger’s proxy services to enhance your scraping efforts and keep your data collection reliable and secure.

We encourage you to share your experiences, challenges, and tips in the comments section. Your insights can help others in the community and contribute to the continuous improvement of web scraping techniques.

Happy scraping!