Tired of copying and pasting? Learn about the easiest ways to scrape images from the web.

Now, if you just want a screen saver or background pic, web scraping may be overkill. But it’s still a skill that transfers over to many other things. So stick around.

We’ll start with browser extensions, look at image extractors, then get into web scraping tools.

What’s image scraping anyways?

Image scraping is simply taking an image URL from a website and putting it in a database to use later.

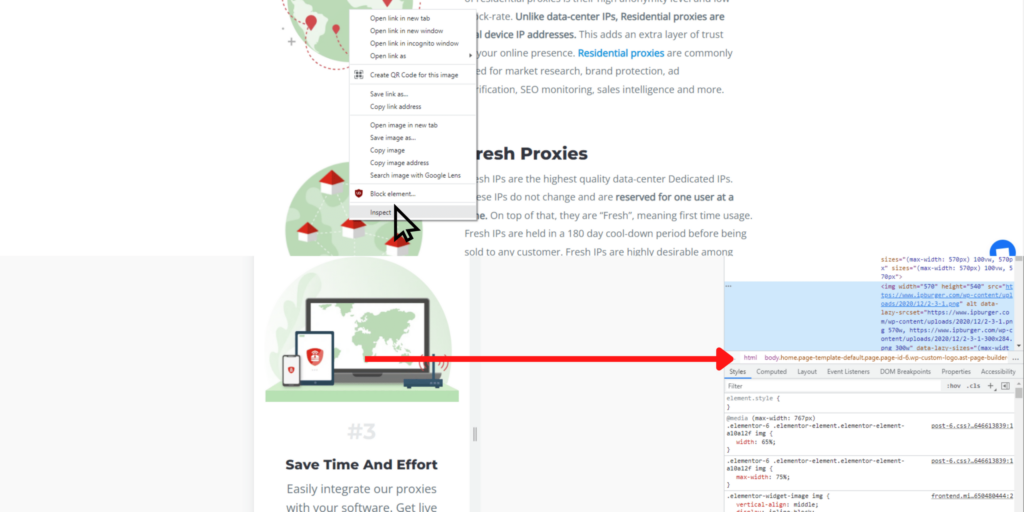

For example, if you want to save ad images from a web page, all you need to do is copy the image URLs from the page’s HTML into a spreadsheet. To find one, right-click the image and click Inspect. In Chrome, the developer tools pop up and highlight the line of HTML for that image.

Automating this process is what most people refer to as image scraping.
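To make that concrete, here’s a minimal sketch of the “find the image line in the HTML” step using only Python’s standard library. The sample HTML, the `example.com` addresses, and the names `ImageURLCollector` and `extract_image_urls` are made up for illustration; a real page will be messier (lazy-loaded images, `srcset`, CSS backgrounds), so treat this as a starting point rather than a complete scraper.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class ImageURLCollector(HTMLParser):
    """Collects the src attribute of every <img> tag it sees."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.image_urls = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        src = dict(attrs).get("src")
        if src:
            # Resolve relative paths (like /ads/banner.png) against the page URL.
            self.image_urls.append(urljoin(self.base_url, src))


def extract_image_urls(html, base_url):
    """Return the absolute URL of every <img src=...> found in the HTML."""
    collector = ImageURLCollector(base_url)
    collector.feed(html)
    return collector.image_urls


# Hypothetical page snippet, for illustration only.
sample_html = (
    '<div><img src="/ads/banner.png" alt="ad">'
    '<img src="https://cdn.example.com/logo.jpg"></div>'
)
print(extract_image_urls(sample_html, "https://example.com/page"))
```

The list it returns is the same thing you’d otherwise paste into a spreadsheet by hand, one URL per image.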

Why automate image scraping?

When you need to grab a few images from a website you don’t have admin access to, you can just “save as” the specific image, and you’ll have the files on your computer.

However, if you need hundreds or thousands of images from multiple URLs, “save as” is a waste of your time. That’s where image scraping comes in. Instead of clicking the same buttons over and over, you can use scripts to automate the process for you–reducing thousands of clicks to just a few.
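Here’s roughly what replacing “save as” with a script can look like. This is a sketch under a few assumptions: you already have a list of image URLs, plain `urllib` requests are enough for the site, and the helper names (`fetch_bytes`, `filename_for`, `download_images`) are ours, not from any particular tool.

```python
import os
from urllib.parse import urlparse
from urllib.request import urlopen


def fetch_bytes(url):
    """Default fetcher: download the raw bytes at a URL."""
    with urlopen(url, timeout=10) as response:
        return response.read()


def filename_for(url, index):
    """Use the last path segment as the file name, with a numbered fallback."""
    name = os.path.basename(urlparse(url).path)
    return name or f"image_{index}"


def download_images(urls, dest_dir, fetch=fetch_bytes):
    """Save every URL into dest_dir and return the paths that were written."""
    os.makedirs(dest_dir, exist_ok=True)
    saved = []
    for index, url in enumerate(urls):
        try:
            data = fetch(url)
        except OSError as error:  # URLError and timeouts are OSError subclasses
            print(f"skipped {url}: {error}")
            continue
        path = os.path.join(dest_dir, filename_for(url, index))
        with open(path, "wb") as handle:
            handle.write(data)
        saved.append(path)
    return saved
```

Calling something like `download_images(my_urls, "images")` then does the clicking for you. Note that two URLs ending in the same file name would overwrite each other here, so a bigger project would want smarter naming.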

Types of image scrapers.

There are several ways to scrape images. Even though all of them are quicker and easier than manually saving each image, one by one, onto your computer, they work differently from each other.

So first we have to define each type of scraping method.

Browser extensions.

With browser extensions, you have to install the plugin and click on the images you want. It’s a lot faster than saving individual files, but it’s still pretty hands-on, as you can imagine.

Browser extensions are a dime a dozen, and if you’ve seen one, you’ve seen them all. You just have to find one that works on your browser, whether that’s Firefox, Chrome, or…(does anyone use anything else?)

Here are a few favorites:

- Click Image Downloader

- Double-click Image Downloader

- Loadify

Image extractors.

Image extractors are like tractors that harvest images. It’s a lazy metaphor (aside from having eight of the same letters), but these programs make it easy to load up on images. Usually, you just have to load the URL, and you can instantly scrape all the images on the page.

This method of collecting images from the web is only suitable for smaller projects because you can only scrape one site at a time.

You can find image extractors specific to Reddit, YouTube, or Twitch, like SocialSnapper, or try out more general extraction software like Image Cyborg or Unsplash Bulk Downloader.

Web scraping tools.

Alright. These are the big guns. Scrape thousands of images–and anything else–from hundreds of web pages without breaking a sweat.

“Web scraping tools” is an umbrella term for all kinds of data collection automation software that crawls, scrapes, analyzes, formats, and stores web data. You can do it yourself with a headless browser, running open-source scripts from your command prompt, or opt for web scraping APIs that simplify the process by presenting quick commands in a graphical user interface (GUI).

If you have programming skills, Python’s scraping libraries are a favorite. However, there are drawbacks to doing it yourself.

- Technical issues: A lot can go wrong when you’re scraping websites. If you’re not familiar with the programs and scripts you’re using, troubleshooting can eat up a lot of time.

- Legal issues: Web scraping is generally legal, but there are cases (like real ones in courtrooms) where claims of privacy infringement, or of negligent scraping that led to property damage, have won.

- Data quality (or lack thereof): You may not even be aware of the difference between good and bad quality data. But if you don’t have any experience with web scraping, chances are that the quality needs improvement.

- Inefficient: If you don’t know what you’re doing, it’s first going to take time to figure that out. Then once you get going, you have to figure out everything else. After doing it for years, you might be close to doing it efficiently.

- Costly: Doing it yourself or in-house may seem like the most affordable option, and if you’re just scraping as a hobby, it can be. On the other hand, if web scraping is a business cost, paying for a professional service is often a better trade for your time.

Our top two recommendations are Octoparse and ParseHub because they have free plans and tons of tutorials to build your scraping skill set. With both, you can quickly learn how to use their software efficiently and economically. All you have to do is download their software and follow their onboarding tutorial.

Easier image scraping with proxies.

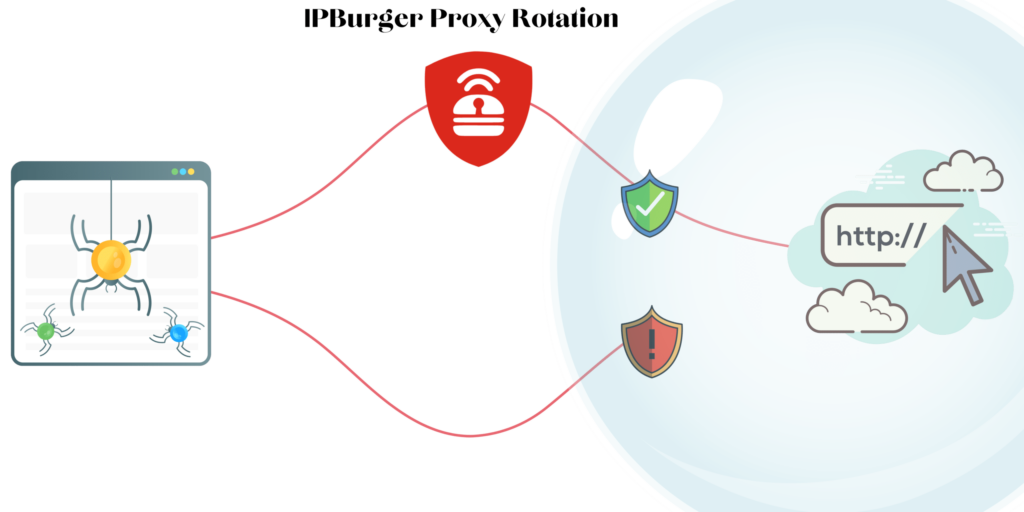

The most common snag when scraping images is websites mistaking you for a hacker or some other malicious entity. This is because web scraping can look like an attack if you send too many consecutive requests from the same IP address.

So to pacify website security, you’ll want to send requests to the URL from hundreds of different IP addresses. To do this, you employ rotating residential proxies, which make it seem like ordinary users are sending requests instead of one busybody riddling the website with thousands of requests per second.
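If you’re writing your own scraper, the rotation idea can be sketched in a few lines of standard-library Python. Everything specific here is an assumption for illustration: the proxy addresses are placeholders from a reserved documentation range, the function names are ours, and a real proxy provider will usually add authentication and its own rotation options.

```python
from itertools import cycle
from urllib.request import ProxyHandler, build_opener

# Placeholder proxy addresses (assumed for the example, not real endpoints).
PROXIES = [
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
]


def rotating_openers(proxies):
    """Yield (proxy, opener) pairs forever, cycling through the proxy list."""
    for proxy in cycle(proxies):
        handler = ProxyHandler({"http": proxy, "https": proxy})
        yield proxy, build_opener(handler)


def fetch_through_proxies(urls, proxies):
    """Fetch each URL through the next proxy in the rotation."""
    openers = rotating_openers(proxies)
    results = {}
    for url in urls:
        proxy, opener = next(openers)
        try:
            with opener.open(url, timeout=10) as response:
                results[url] = response.read()
        except OSError as error:  # connection errors and timeouts
            print(f"{url} via {proxy} failed: {error}")
    return results
```

Each request goes out through a different address in turn, so no single IP carries the whole load. With only three proxies the effect is small; the point is the round-robin pattern, which works the same way with a list of hundreds.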

They’re effortless to set up using IPBurger’s intuitive proxy manager. All you do is set your parameters–location, internet service provider, and web protocol–and then generate a list of proxies from over 75 million residential IP addresses. Then you need to plug in the proxies to the web scraper, and that’s it.

Do you need proxies for a simple image scraper?

Not in the way you need them for more robust web scrapers, but there are many other use cases for high-quality residential proxies.

And if you one day decide to scale your data collection efforts and image extractors aren’t cutting it–you’re locked and loaded for uninterrupted web scraping.