What kind of file format do you use for web scraping? The answer is a little complex, so this guide simplifies the most common types for you.

Do you ever look under the hood of a website? Try pressing F12 on your keyboard (don’t freak out).

If you’re using Chrome, the developer tools will pop up and give you a glimpse of the complexity that underlies all this easy-to-read content.

This is the stuff you’re actually scraping from websites.

You’ll find HyperText Markup Language (HTML), CSS, JavaScript, and tonnes of other languages that computers use to structure, transfer, and display data.

Consider this post an attempt to narrow down the languages you need to know to scrape the web.

What’s a file format?

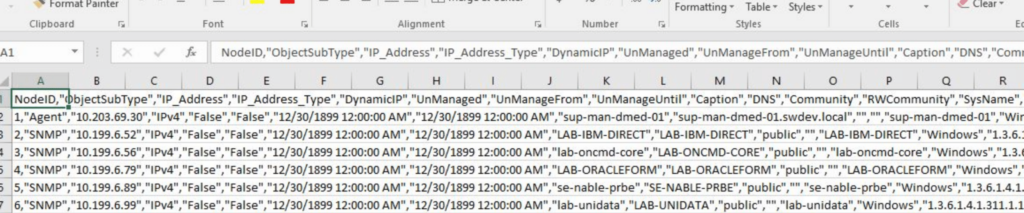

A file format is a structural map that tells a program how to store and display a file’s contents. It specifies whether the file is binary or text and defines how the data is organized. For example, CSV stores plain-text data as rows and columns.

By looking at the file extension, you can usually identify the file format.

For example, if you save a file as “document” in CSV format, it appears as “document.csv.” When you open it, you can see the data in tabular form.
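Here is a minimal sketch of extension-based identification in Python. It assumes a small hand-written lookup table; `FORMATS` and `describe` are illustrative names, not a standard API. The extension only tells you what a file claims to be, because the lookup never inspects the contents.

```python
from pathlib import Path

# Illustrative lookup table. Extensions are a naming convention, so this
# reports what a file claims to be, not what it actually contains.
FORMATS = {
    ".csv": "Comma-Separated Values (text)",
    ".json": "JavaScript Object Notation (text)",
    ".xml": "Extensible Markup Language (text)",
    ".html": "HyperText Markup Language (text)",
    ".xls": "Excel 97-2003 workbook (binary)",
    ".xlsx": "Excel Open XML workbook (zipped XML)",
    ".jpg": "JPEG image (binary)",
}

def describe(filename: str) -> str:
    # Path.suffix returns the last extension, e.g. ".csv" for "document.csv".
    suffix = Path(filename).suffix.lower()
    return FORMATS.get(suffix, "unknown format")

print(describe("document.csv"))  # Comma-Separated Values (text)
```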

What’s a spreadsheet file format?

A spreadsheet organizes a document into a grid of cells, with numbers labeling the rows and letters labeling the columns. A spreadsheet file format is the organization and storage of data in those cells.

Some common spreadsheet file formats are Comma Separated Values (.csv), Microsoft Excel Spreadsheet (.xls), and Microsoft Excel Open XML Spreadsheet (.xlsx).

What’s the difference between binary and text file formats?

Have you ever tried to open a JPEG in Notepad?

It’s a mess.

That’s because JPEG is a binary file format. Its bytes aren’t readable by humans and need a program to decode them. Text file formats, on the other hand, are readable by humans.

Binary files are typically faster to load and process because the program doesn’t need to parse any text. The downside is that binary files are hard to edit, and changing their layout is awkward: you typically need to keep both the old and new read and write functions and embed a version number in the file so programs know which layout they’re reading.

You can open and edit a text file in any editor without problems, but the program reading it has to parse the text, which makes processing slower.
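To make the trade-off concrete, here is a sketch in Python that stores the same record both ways. The binary layout is a hypothetical format invented for illustration. It consists of a version byte, a 32-bit ID, and a 64-bit price, packed with the standard `struct` module. The version byte is the kind of marker that lets a reader tell old and new layouts apart.

```python
import struct

# Hypothetical record layout, for illustration only: a 1-byte format version,
# an unsigned 32-bit ID, and a 64-bit float price, little-endian, no padding.
RECORD_V1 = struct.Struct("<BId")

def write_binary(record_id: int, price: float) -> bytes:
    # The version byte lets a future reader detect which layout it's reading.
    return RECORD_V1.pack(1, record_id, price)

def read_binary(data: bytes) -> tuple[int, float]:
    version = data[0]
    if version != 1:
        raise ValueError(f"unsupported format version {version}")
    _, record_id, price = RECORD_V1.unpack(data)
    return record_id, price

def write_text(record_id: int, price: float) -> str:
    # The same record as plain text: editable in any editor,
    # but a program has to parse it back into numbers.
    return f"{record_id},{price}"

def read_text(line: str) -> tuple[int, float]:
    record_id, price = line.split(",")
    return int(record_id), float(price)

binary = write_binary(42, 19.99)
text = write_text(42, 19.99)
print(binary)  # raw bytes, mostly unreadable
print(text)    # 42,19.99
```

Reading the binary record is a single fixed-size unpack. Reading the text record requires splitting and converting strings. Adding a field to the binary layout, however, would mean a new version number and a second unpack path.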

Choosing the correct file format

When you set out on a data collection project, you need to consider what format the data comes in, how you want to present it, and how you’re going to store it.

Here are some other vital factors:

- What formats do you and your clients typically use?

- What software is compatible with your hardware?

- How do you plan to analyze, sort, and store your data?

- What file formats are easiest to share?

- How will you open and read your data in the future?

Popular Data Formats for Web Scraping

There are countless data formats. Some, like the columnar formats ORC and Parquet, are better suited to long-term storage, while others are better for transferring data between computers.

For web scraping, you want to be able to find, gather, analyze and store data.

Comma-Separated Values file format (.csv)

CSV is the most common format, and most people already know how it works.

CSV works well for two-dimensional data (rows and columns). However, much of the data we encounter has more dimensions, such as nested or hierarchical fields, and doesn’t fit well into a two-dimensional spreadsheet.

Another drawback of CSV is its inflexibility when rows have different numbers of columns: every row is expected to follow the same header.
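Here is a minimal sketch of that limitation, using Python’s built-in `csv` module. The hypothetical scraped records below don’t share the same fields, so the script builds one combined header and leaves missing values blank. It writes to an in-memory buffer. For a real file, you’d open it with `newline=""`, as the `csv` documentation recommends.

```python
import csv
import io

# Hypothetical scraped records: the second one has an extra "rating" field.
records = [
    {"title": "Widget", "price": "9.99"},
    {"title": "Gadget", "price": "19.99", "rating": "4.5"},
]

# CSV needs one fixed header, so collect every field name up front
# (in first-seen order) and fill missing values with "" via restval.
fieldnames = []
for record in records:
    for key in record:
        if key not in fieldnames:
            fieldnames.append(key)

buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=fieldnames, restval="")
writer.writeheader()
writer.writerows(records)
print(buffer.getvalue())
```

The first row ends up with an empty `rating` cell. With many optional fields, this padding quickly makes the table sparse.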

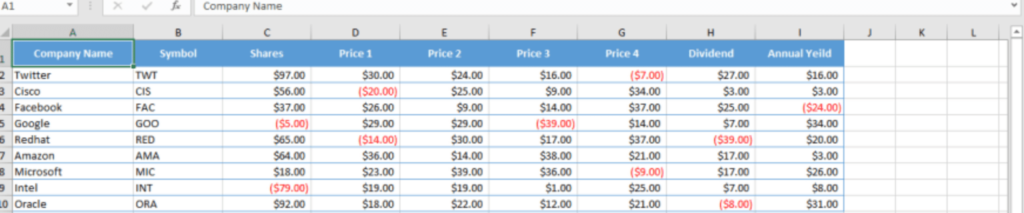

Microsoft Excel file formats (.xls and .xlsx)

Excel’s formats are a good option when people will view and work with the data directly. The data must be flat (two-dimensional), and the formats work best with smaller data sets or exploratory analysis.

Excel files can also contain a lot of extra information, such as graphs, charts, formatting, formulas, and pictures.

XLS and XLSX differ mainly in age and structure. XLS, a binary format, was Excel’s default from Excel 97 through Excel 2003, while XLSX, an XML-based format, has been the default since Excel 2007.
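The two formats are built differently. XLS is a binary Compound File, and XLSX is a ZIP archive of XML parts. Because of this, you can often tell them apart by their first bytes, even when the extension is wrong. The sketch below is minimal, and `sniff_excel_format` and `sniff_file` are hypothetical helpers. Note that the signatures only identify the container type: other Office files, such as .doc or .docx, share them.

```python
# Magic numbers found at the very start of each container type.
OLE2_SIGNATURE = bytes.fromhex("D0CF11E0A1B11AE1")  # Compound File, used by legacy .xls
ZIP_SIGNATURE = b"PK\x03\x04"                        # ZIP archive, used by .xlsx

def sniff_excel_format(header: bytes) -> str:
    """Guess the Excel format from a file's leading bytes."""
    if header.startswith(OLE2_SIGNATURE):
        return "xls"
    if header.startswith(ZIP_SIGNATURE):
        return "xlsx"
    return "unknown"

def sniff_file(path: str) -> str:
    # Eight bytes are enough to cover the longer of the two signatures.
    with open(path, "rb") as f:
        return sniff_excel_format(f.read(8))
```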

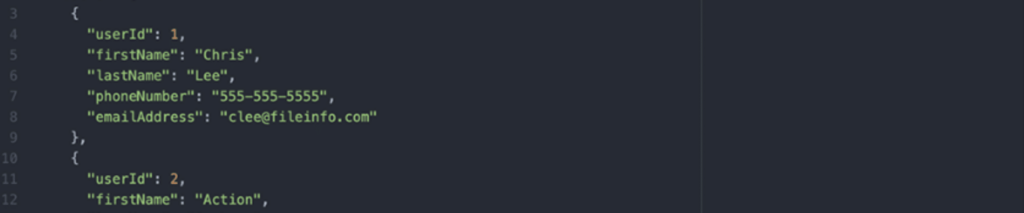

JavaScript Object Notation (.json)

JSON is a widely adopted, lightweight format. As a text-based format, it’s easy for humans to read and write and easy for machines to parse, although many nested fields can make it harder to read.

JSON is great for small data sets, landing data, or API integration. If you need to process large amounts of data, it’s better to convert to a more efficient format.

It handles multi-dimensional (nested) and semi-structured data well, and you can add or remove fields without changing a fixed schema.

Most databases and languages support or have readily available libraries for importing and exporting JSON.
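Here is a minimal sketch using Python’s built-in `json` module. The made-up records below have nested fields and different keys per record, and JSON stores them without a fixed header.

```python
import json

# Hypothetical scraped product records: a list field, a nested object,
# and keys that differ between records are all valid JSON.
records = [
    {"title": "Widget", "price": 9.99, "tags": ["tools", "sale"]},
    {"title": "Gadget", "price": 19.99, "seller": {"name": "Acme", "rating": 4.5}},
]

serialized = json.dumps(records, indent=2)
print(serialized)

# Parsing the text back yields the same Python objects.
round_trip = json.loads(serialized)
print(round_trip[1]["seller"]["name"])  # Acme
```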

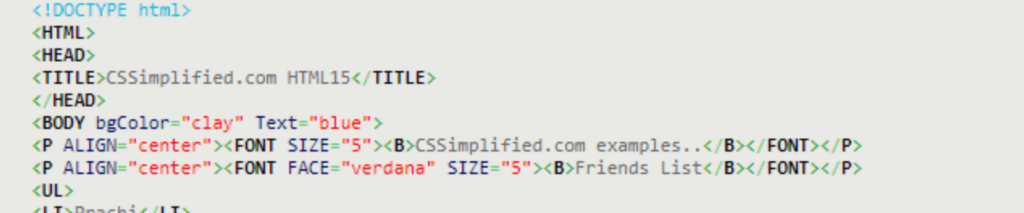

HyperText Markup Language file format (.html)

A markup language uses tags (letters and symbols that aren’t displayed themselves) to annotate the visible content and tell the browser how to structure it. In other words, HTML describes a web page’s structure by wrapping its content in tags.

Unlike XML, which lets you define your own tags, HTML comes with a predefined set of standard tags.
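Those predefined tags are what a scraper navigates. Here is a minimal sketch using Python’s standard-library `html.parser`. The hypothetical `LinkExtractor` class collects each link’s `href` and text from a made-up HTML snippet. Real pages are messier, and dedicated parsing libraries are often more convenient.

```python
from html.parser import HTMLParser

class LinkExtractor(HTMLParser):
    """Collects (href, text) pairs for every <a> tag it sees."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._in_link = False
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        # attrs arrives as a list of (name, value) tuples.
        if tag == "a":
            self._in_link = True
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        if self._in_link:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._in_link:
            self.links.append((self._href, "".join(self._text).strip()))
            self._in_link = False

page = '<ul><li><a href="/widgets">Widgets</a></li><li><a href="/gadgets">Gadgets</a></li></ul>'
parser = LinkExtractor()
parser.feed(page)
parser.close()  # flush any buffered input
print(parser.links)
```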

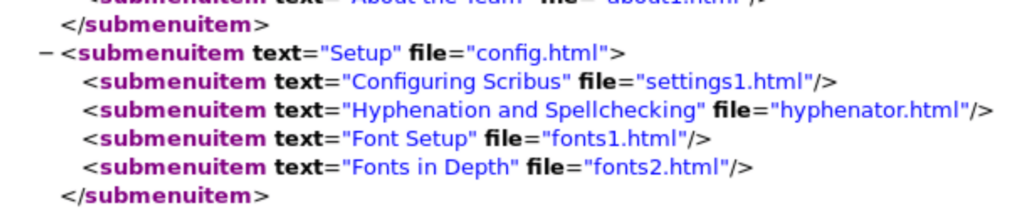

Extensible Markup Language file format (.xml)

XML is another markup language that we can use to transfer data between computers. Like HTML, it is a text-based file format readable by both humans and computers.

The main difference is flexibility: because you can define your own custom tags, XML can describe whatever data structure you need, not just the structure of a web page.
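To see custom tags in practice, here is a minimal sketch using Python’s standard-library `xml.etree.ElementTree`. The `<products>`, `<product>`, `<title>`, and `<price>` tags are invented for this example, which is exactly the kind of thing XML allows.

```python
import xml.etree.ElementTree as ET

# Hypothetical custom vocabulary: XML doesn't predefine any of these tags.
document = """
<products>
  <product id="1"><title>Widget</title><price currency="USD">9.99</price></product>
  <product id="2"><title>Gadget</title><price currency="USD">19.99</price></product>
</products>
"""

root = ET.fromstring(document.strip())
results = []
for product in root.findall("product"):
    title = product.findtext("title")
    price = float(product.findtext("price"))
    currency = product.find("price").get("currency")  # attribute lookup
    results.append((product.get("id"), title, price, currency))

for row in results:
    print(row)
```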

What’s a suitable file format for web scraping?

Even though CSV is more popular, JSON is the most universal and flexible format for web scraping. The other formats come with more challenges and customization, which typically makes them more resource-intensive to work with.

CSV still has its place: you can display CSV files in Microsoft Excel, usually just by right-clicking a file and opening it with Excel. This makes CSV ideal for organizing and presenting the data.
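If you collect data as JSON but need to present it in Excel, one approach is to flatten the records into CSV. The sketch below assumes the JSON is a list of objects. The `flatten` and `json_to_csv` functions are hypothetical helpers, and dotted column names (`seller.name`) are just one possible convention.

```python
import csv
import io
import json

def flatten(record: dict, prefix: str = "") -> dict:
    """Flatten nested dicts into dotted column names, e.g. seller.name."""
    flat = {}
    for key, value in record.items():
        name = f"{prefix}{key}"
        if isinstance(value, dict):
            flat.update(flatten(value, prefix=f"{name}."))
        else:
            flat[name] = value
    return flat

def json_to_csv(json_text: str) -> str:
    rows = [flatten(r) for r in json.loads(json_text)]
    # Union of all columns in first-seen order, so every row fits one header.
    fieldnames = list(dict.fromkeys(k for row in rows for k in row))
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=fieldnames, restval="", lineterminator="\n")
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()

scraped = '[{"title": "Widget", "price": 9.99}, {"title": "Gadget", "price": 19.99, "seller": {"name": "Acme"}}]'
print(json_to_csv(scraped))
```

Lists inside records aren’t handled specially here. They would be written in Python’s string form, so you may want to join or split them into rows before exporting.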

We hope this adds some context to your data collection strategy. If you’re ready for more, you can begin learning how to choose the right web scraping tool for your projects.